AutoChemplete
-------------

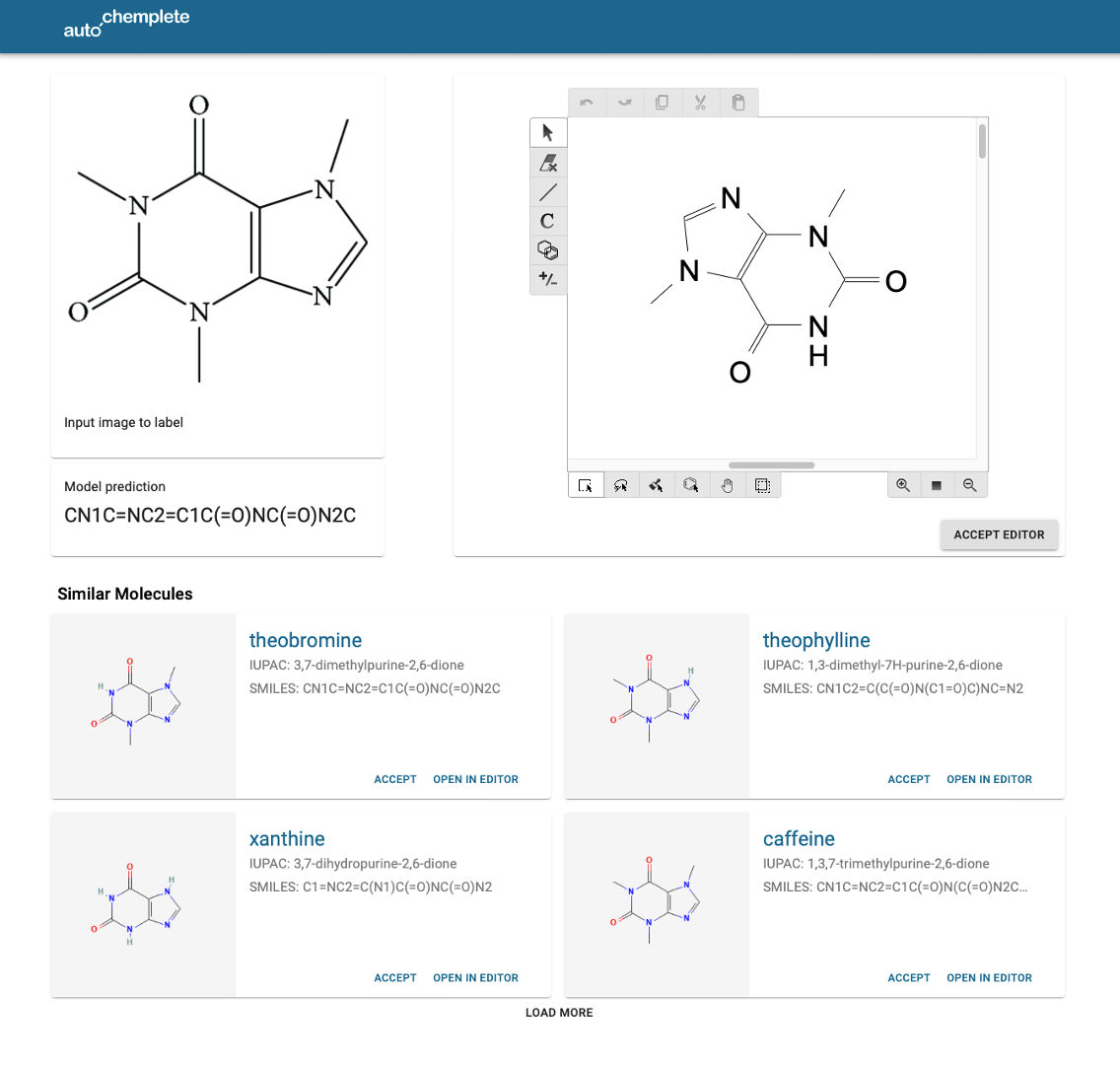

Blind and low-vision students in STEM subjects need specially labeled, PDF/UA-compliant documents so that they can read them with magnification, screen readers, or refreshable Braille displays. This labeling process is time-consuming and requires considerable domain expertise, especially when writing alternative texts or other accessible representations of images. In STEM, many figures are not merely illustrative: they carry important information that is not conveyed in the text. Accessibility enablers often struggle to find labelers with the required domain expertise.

Against this backdrop, we have elicited requirements for, developed, and evaluated AutoChemplete. It enables novices and experts alike to annotate images of structural chemical formulas smoothly and easily. We use state-of-the-art machine learning to produce an initial suggestion. A search in a chemical database then queries the solution space for the closest matching results, which are displayed to the labeler for selection. From the selected result, we can generate various accessible representations.
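The database step can be pictured as a nearest-neighbor search around the machine-learning suggestion. The following is a minimal sketch under stated assumptions, not AutoChemplete's implementation: it assumes candidates are ranked by Tanimoto similarity, a common measure in cheminformatics, and `toy_fingerprint`, `closest_candidates`, and the in-memory `db` are hypothetical stand-ins. A real system would compute structural fingerprints (for example Morgan fingerprints with RDKit) and query an actual chemical database.

```python
from typing import Dict, FrozenSet, List, Tuple


# Hypothetical toy fingerprint (assumption): the set of character bigrams
# of a SMILES string. It ignores chemical structure and only serves to
# make the ranking step runnable without a cheminformatics toolkit.
def toy_fingerprint(smiles: str) -> FrozenSet[str]:
    return frozenset(smiles[i:i + 2] for i in range(len(smiles) - 1))


def tanimoto(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Tanimoto (Jaccard) similarity of two feature sets: |A & B| / |A | B|."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def closest_candidates(
    predicted_smiles: str,
    database: Dict[str, str],
    k: int = 3,
) -> List[Tuple[str, str, float]]:
    """Rank database entries by similarity to the recognizer's suggestion.

    `database` maps a compound name to its SMILES string (a hypothetical
    in-memory stand-in for a real chemical database).
    Returns up to k tuples (name, smiles, score), best match first.
    """
    query = toy_fingerprint(predicted_smiles)
    scored = [
        (name, smiles, tanimoto(query, toy_fingerprint(smiles)))
        for name, smiles in database.items()
    ]
    # Highest similarity first; ties are broken by name for a stable order.
    scored.sort(key=lambda item: (-item[2], item[0]))
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical mini-database.
    db = {
        "ethanol": "CCO",
        "methanol": "CO",
        "acetic acid": "CC(=O)O",
        "benzene": "c1ccccc1",
    }
    # Suppose the image recognizer produced "CCCO", which is not in db;
    # the labeler is shown the closest entries to choose from.
    suggestion = "CCCO"
    for name, smiles, score in closest_candidates(suggestion, db):
        print(f"{name:12s} {smiles:10s} {score:.2f}")
```

Run as a script, this lists ethanol (1.00), methanol (0.50), and acetic acid (0.14). Ethanol scores a perfect 1.00 even though it is a different molecule from `CCCO`, which illustrates why a real structural fingerprint is needed in place of the toy one.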