This project aims to develop an explainable interactive machine learning (ML) system. To do so, it draws on the extensive research on (1) Explainable Artificial Intelligence (XAI) and (2) Interactive Machine Learning (IML) and integrates the findings into the underlying system components. Both research streams are presented below.

Explainable Artificial Intelligence (XAI)

ML is increasingly entering the mainstream and supporting human decision-making (Wang et al., 2019). Because of their ability to learn, ML systems are changing how complex decisions are made (Ågerfalk, 2020; Kellogg, Valentine, and Christin, 2020). However, the effectiveness of these systems is limited by the machine's inability to explain its thoughts and actions to human users (Wang et al., 2019). The high complexity of some ML models makes their decisions hard to understand, which is a major problem (Dosilovic, Brcic, and Hlupic, 2018). In addition, new regulations, such as the European Union's General Data Protection Regulation (GDPR), emphasize the "right to explanation" of all decisions made (Ribeiro, Singh, and Guestrin, 2018; Cheng et al., 2019).

The field of XAI may offer a promising avenue to address these challenges. Diakopoulos et al. (2017) concluded that the goal is to "ensure that algorithmic decisions, as well as any data driving those decisions, can be explained to end-users and other stakeholders in non-technical terms."

Interactive Machine Learning (IML)

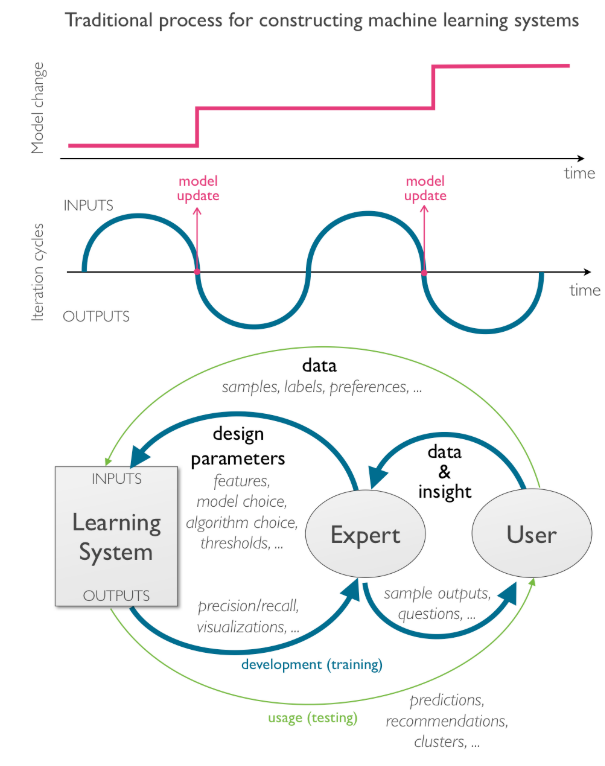

Another challenge is that the development of ML systems is largely reserved for experts. As a result, potential users of ML systems have limited involvement in the development process. Many of these potential human users are domain experts who could provide valuable feedback to improve the performance of these systems (Amershi et al., 2014). In the widely used traditional process for constructing ML systems, there is no direct way for users to interact with the system in training and refining the model. Instead, users interact with experts who translate user requirements and incorporate them into the model (see Figure 1). As a result, users cannot provide continuous feedback to the system and their ability to influence the resulting models is limited.

The concept of IML offers a promising way to address this challenge by allowing users to interact directly with the ML system (Porter, Theiler, and Hush, 2013). The goal is to engage users and allow them to iteratively refine the ML model by providing feedback, making corrections, and reviewing model results (Amershi et al., 2014).
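The iterative loop described above can be illustrated with a minimal sketch. It is not the project's system: the nearest-centroid classifier, the function names (`train_centroids`, `predict`, `interactive_refinement`, `simulated_expert`), and the threshold used by the simulated domain expert are all hypothetical choices made only to show how user corrections could feed back into retraining.

```python
from collections import defaultdict


def train_centroids(examples):
    """Fit a toy nearest-centroid classifier: one mean value per label."""
    sums, counts = defaultdict(float), defaultdict(int)
    for x, label in examples:
        sums[label] += x
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


def interactive_refinement(labeled, pool, ask_user, max_rounds=5):
    """Retrain the model until the user has no more corrections.

    ask_user(x, shown_label) stands in for the user interface: the user
    reviews the model's prediction and returns the label they consider right.
    """
    labeled = list(labeled)
    model = train_centroids(labeled)
    history = []  # number of corrections the user made in each round
    for _ in range(max_rounds):
        corrections = []
        for x in pool:
            shown = predict(model, x)       # model result shown to the user
            feedback = ask_user(x, shown)   # user reviews the result
            if feedback != shown:
                corrections.append((x, feedback))
        history.append(len(corrections))
        if not corrections:
            break
        labeled.extend(corrections)         # feedback becomes training data
        model = train_centroids(labeled)
    return model, history


def simulated_expert(x, shown_label):
    """Hypothetical domain expert: values of 5 or more count as 'high'."""
    return "high" if x >= 5 else "low"


model, history = interactive_refinement(
    labeled=[(0.0, "low"), (20.0, "high")],
    pool=[2.0, 6.0, 8.0, 12.0],
    ask_user=simulated_expert,
)
print(history)
print(predict(model, 6.0))
```

In a real IML system, `ask_user` would be replaced by an interface through which domain experts review and correct model results, and the toy classifier by the actual ML model.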

Figure 1: Traditional approach to developing machine learning models (Amershi et al., 2014)

Project Goals

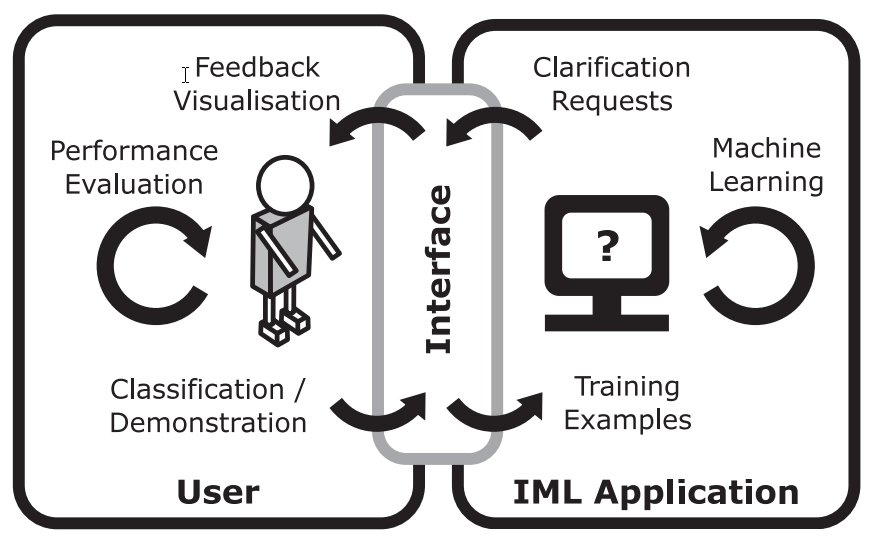

The goal of the project is to develop an explainable interactive ML system. This will involve laboratory and field experiments to evaluate the impact of XAI and IML approaches (see also Figure 2). Specifically, the project aims to increase our understanding of how domain-specific feedback mechanisms, interaction approaches, and explanation types affect ML model performance as well as user perceptions of the model. Overall, we aim to establish design guidelines for the development of explainable interactive ML systems.

Figure 2: The user refines the ML model by performing a series of iterative interactions in order to provide feedback to the system (Dudley and Kristensson, 2018)

Selected References

- Ågerfalk, P. J. (2020) ‘Artificial Intelligence as Digital Agency’, European Journal of Information Systems, 29(1), pp. 1–8.

- Amershi, S. et al. (2014) ‘Power to the People: The Role of Humans in Interactive Machine Learning’, AI Magazine, 35(4), pp. 105–120. doi: 10.1609/aimag.v35i4.2513.

- Cheng, H.-F. et al. (2019) ‘Explaining Decision-Making Algorithms through UI: Strategies to Help Non-Expert Stakeholders’, in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems - CHI ’19. New York, New York, USA: ACM Press, pp. 1–12. doi: 10.1145/3290605.3300789.

- Diakopoulos, N. et al. (2017) ‘Principles for Accountable Algorithms and a Social Impact Statement for Algorithms’, FAT/ML. Available at: https://www.fatml.org/resources/principles-for-accountable-algorithms (Accessed: 20 April 2020).

- Dosilovic, F. K., Brcic, M. and Hlupic, N. (2018) ‘Explainable artificial intelligence: A survey’, in 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics, MIPRO 2018 - Proceedings. Institute of Electrical and Electronics Engineers Inc., pp. 210–215. doi: 10.23919/MIPRO.2018.8400040.

- Dudley, J. J. and Kristensson, P. O. (2018) ‘A Review of User Interface Design for Interactive Machine Learning’, ACM Transactions on Interactive Intelligent Systems. ACM, 8(2), pp. 1–37. doi: 10.1145/3185517.

- Kellogg, K. C., Valentine, M. A. and Christin, A. (2020) ‘Algorithms at work: The new contested terrain of control’, Academy of Management Annals. Academy of Management, 14(1), pp. 366–410. doi: 10.5465/annals.2018.0174.

- Porter, R., Theiler, J. and Hush, D. (2013) ‘Interactive machine learning in data exploitation’, Computing in Science and Engineering, 15(5), pp. 12–20. doi: 10.1109/MCSE.2013.74.

- Ribeiro, M. T., Singh, S. and Guestrin, C. (2018) ‘Anchors: High-Precision Model-Agnostic Explanations’, in Thirty-Second AAAI Conference on Artificial Intelligence. Available at: www.aaai.org (Accessed: 11 December 2019).

- Wang, D. et al. (2019) ‘Designing theory-driven user-centric explainable AI’, in Conference on Human Factors in Computing Systems - Proceedings. New York, New York, USA: Association for Computing Machinery, pp. 1–15. doi: 10.1145/3290605.3300831.